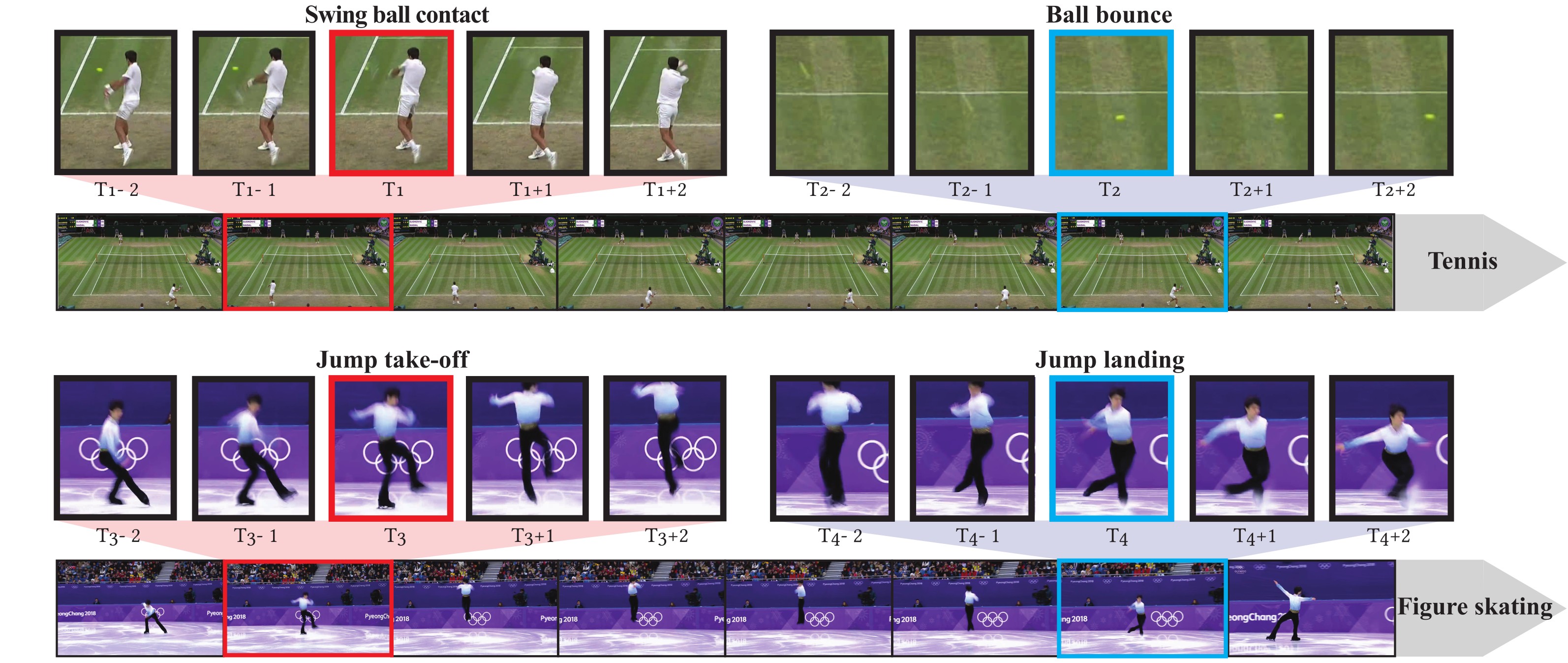

We introduce the task of spotting temporally precise, fine-grained events in video, that is, detecting the precise moment in time at which events occur. Precise spotting requires models to reason globally about the full time scale of actions and locally to recognize the subtle frame-to-frame appearance and motion differences that identify events during these actions. Surprisingly, we find that top-performing solutions to prior video understanding tasks such as action detection and segmentation do not simultaneously meet both requirements.

In response, we propose E2E-Spot, a compact, end-to-end model that performs well on the precise spotting task and can be trained quickly on a single GPU. We demonstrate that E2E-Spot significantly outperforms recent baselines adapted from the video action detection, segmentation, and spotting literature to the precise spotting task. Finally, we contribute new annotations and splits to several fine-grained sports action datasets to make these datasets suitable for future work on precise spotting.

@inproceedings{precisespotting_eccv22,
  author={Hong, James and Zhang, Haotian and Gharbi, Micha\"{e}l and Fisher, Matthew and Fatahalian, Kayvon},
  title={Spotting Temporally Precise, Fine-Grained Events in Video},
  booktitle={ECCV},
  year={2022}
}

This work is supported by the National Science Foundation (NSF) under III-1908727, Intel Corporation, and Adobe Research.