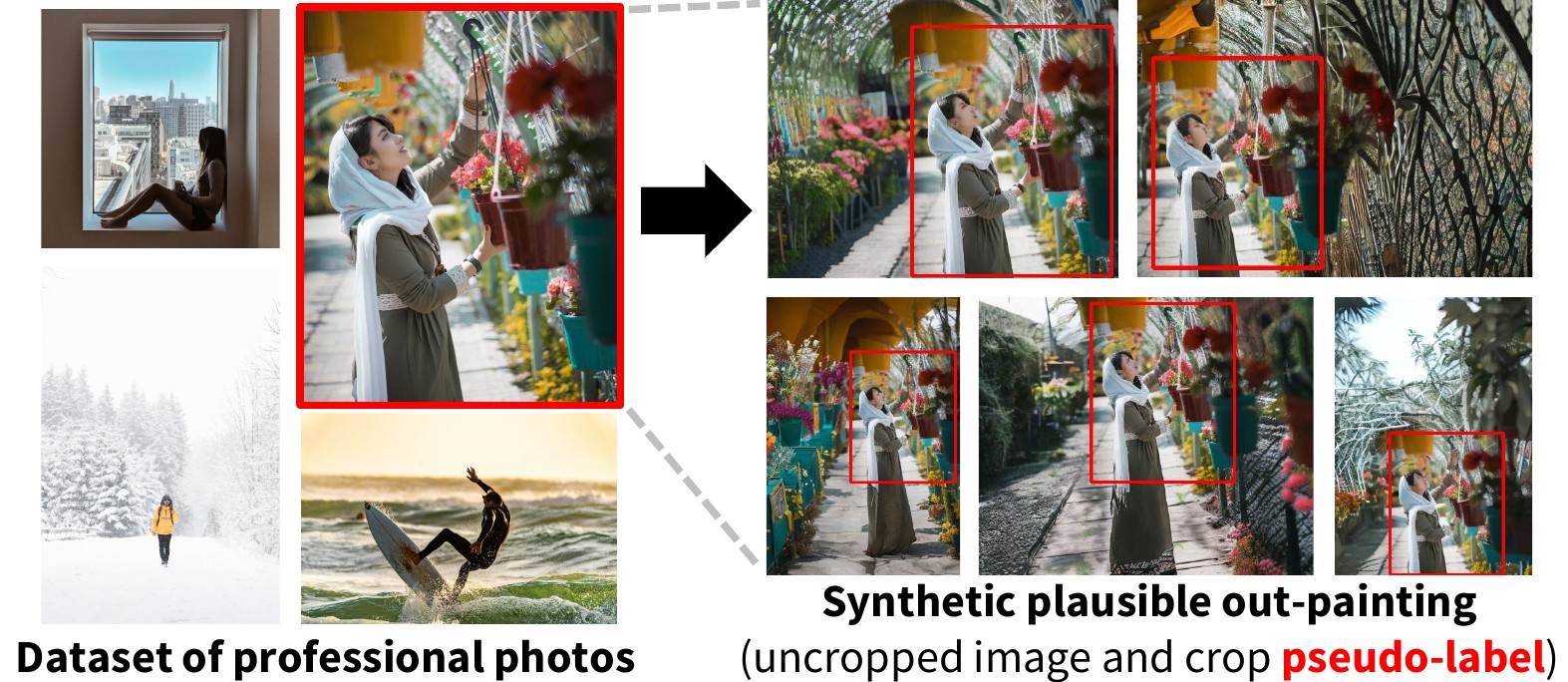

How to frame (or crop) a photo often depends on the image subject and its context, for example, in a human portrait. Recent works have defined the subject-aware image cropping task as a nuanced and practical version of image cropping. We propose a weakly-supervised approach (GenCrop) to learn what makes a high-quality, subject-aware crop from professional stock images. Unlike supervised prior work, GenCrop requires no new manual annotations beyond the existing stock image collection. The key challenge in learning from this data, however, is that the images are already cropped and we do not know what regions were removed. Our insight is to combine a library of stock images with a modern, pre-trained text-to-image diffusion model. The stock image collection provides diversity, and its images serve as pseudo-labels for a good crop. The text-to-image diffusion model is used to out-paint (i.e., outward in-painting) realistic, uncropped images. Using this procedure, we are able to automatically generate a large dataset of cropped-uncropped training pairs to train a cropping model. Despite being weakly-supervised, GenCrop is competitive with state-of-the-art supervised methods and significantly better than comparable weakly-supervised baselines on quantitative and qualitative evaluation metrics.
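The pairing idea above can be illustrated with a minimal sketch of the geometry alone: a stock image is placed at a random position on a larger blank canvas, the surrounding area is what a diffusion model would out-paint, and the image's original extent becomes the pseudo-label crop box. The names `OutpaintPlan`, `plan_outpaint`, and `normalized_box`, the `scale` parameter, and the uniform random placement are all assumptions made for illustration; they are not taken from GenCrop, and the out-painting step itself is omitted.

```python
import random
from dataclasses import dataclass


@dataclass
class OutpaintPlan:
    """Hypothetical container describing one cropped-uncropped pair."""
    canvas_w: int
    canvas_h: int
    box: tuple  # (left, top, right, bottom) of the stock image, in canvas pixels


def plan_outpaint(img_w, img_h, scale=1.5, rng=None):
    """Place a stock image of size img_w x img_h on a canvas `scale` times larger.

    The region outside `box` is what an out-painting model would fill in;
    `box` itself is the pseudo-label for a good crop of the enlarged image.
    The scale and the uniform placement are illustrative assumptions.
    """
    if scale < 1.0:
        raise ValueError("scale must be >= 1 so the canvas contains the image")
    rng = rng or random.Random()
    canvas_w = round(img_w * scale)
    canvas_h = round(img_h * scale)
    # Random offset so the original image is not always centered.
    left = rng.randint(0, canvas_w - img_w)
    top = rng.randint(0, canvas_h - img_h)
    return OutpaintPlan(canvas_w, canvas_h, (left, top, left + img_w, top + img_h))


def normalized_box(plan):
    """Return the crop box in [0, 1] coordinates relative to the canvas."""
    left, top, right, bottom = plan.box
    return (
        left / plan.canvas_w,
        top / plan.canvas_h,
        right / plan.canvas_w,
        bottom / plan.canvas_h,
    )


if __name__ == "__main__":
    plan = plan_outpaint(400, 600, scale=1.5, rng=random.Random(0))
    print(plan.canvas_w, plan.canvas_h, plan.box, normalized_box(plan))
```

In a setup like this, a cropping model would take the out-painted canvas as input and be trained to predict the normalized box, so no manual crop annotations are needed.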

@inproceedings{gencrop,
  author={Hong, James and Yuan, Lu and Gharbi, Micha\"{e}l and Fisher, Matthew and Fatahalian, Kayvon},
  title={Learning Subject-Aware Cropping by Outpainting Professional Photos},
  booktitle={AAAI},
  year={2024}
}

This work is supported by gifts from Meta and Adobe Research as well as computing support from Stanford HAI.